An important new mantra is search-driven applications. In fact, "search" is the new way of navigating through your information. In many organizations an important part of the business data is stored in SAP business suites. A frequently asked need is to navigate through the business data stored in SAP, via a user-friendly and intuitive application context. For many organizations (78% according to Microsoft numbers), SharePoint is the basis for the integrated employee environment.

Starting with SharePoint 2010, FAST Enterprise Search Platform (FAST ESP) is part of the SharePoint platform. All analyst firms assess FAST ESP as a leader in their scorecards for Enterprise Search technology. For organizations that have SAP and Microsoft SharePoint administrations in their infrastructure, the FAST search engine provides opportunities that one should not miss.

SharePoint Search

Search is one of the supporting pillars in SharePoint. And an extremely important one, for realizing the SharePoint proposition of an information hub plus collaboration workplace. It is essential that information you put into SharePoint, is easy to be found again. By yourself of course, but especially by your colleagues.

However, from the context of 'central information hub', more is needed. You must also find and review via the SharePoint workplace the data that is administrated outside SharePoint. Examples are the business data stored in Lines-of-Business systems [SAP, Oracle, Microsoft Dynamics], but also data stored on network shares.With the purchase of FAST ESP, Microsoft's search power of the SharePoint platform sharply increased. All analyst firms consider FAST, along with competitors Autonomy and Google Search Appliance as 'best in class' for enterprise search technology. For example, Gartner positioned FAST as leader in the Magic Quadrant for Enterprise Search, just above Autonomy. In SharePoint 2010 context FAST is introduced as a standalone extension to the Enterprise Edition, parallel to SharePoint Enterprise Search. In SharePoint 2013, Microsoft has simplified the architecture. FAST and Enterprise Search are merged, and FAST is integrated into the standard Enterprise edition and license.

SharePoint FAST Search architecture

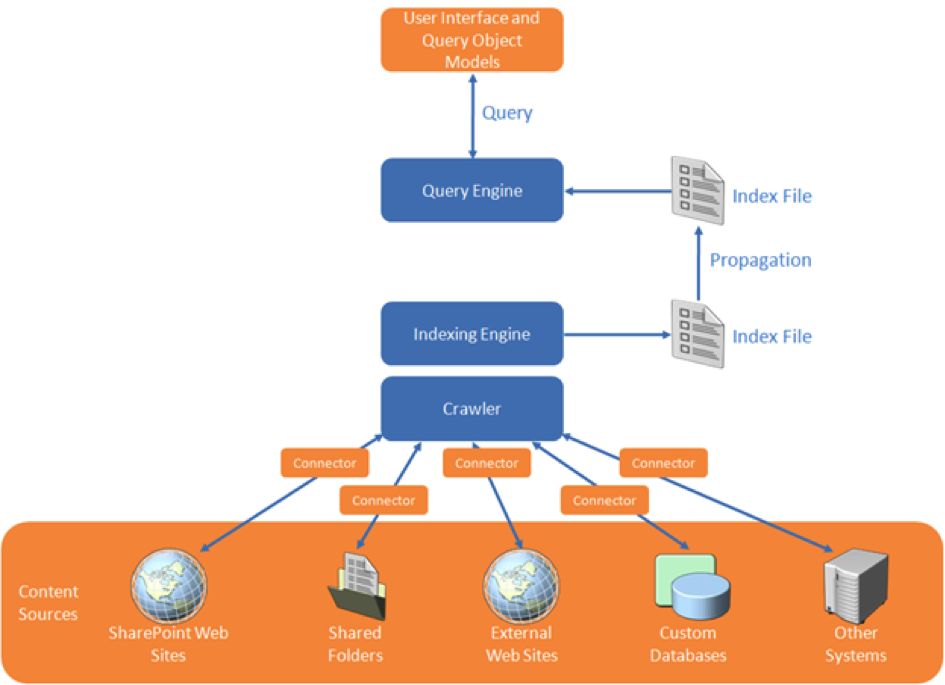

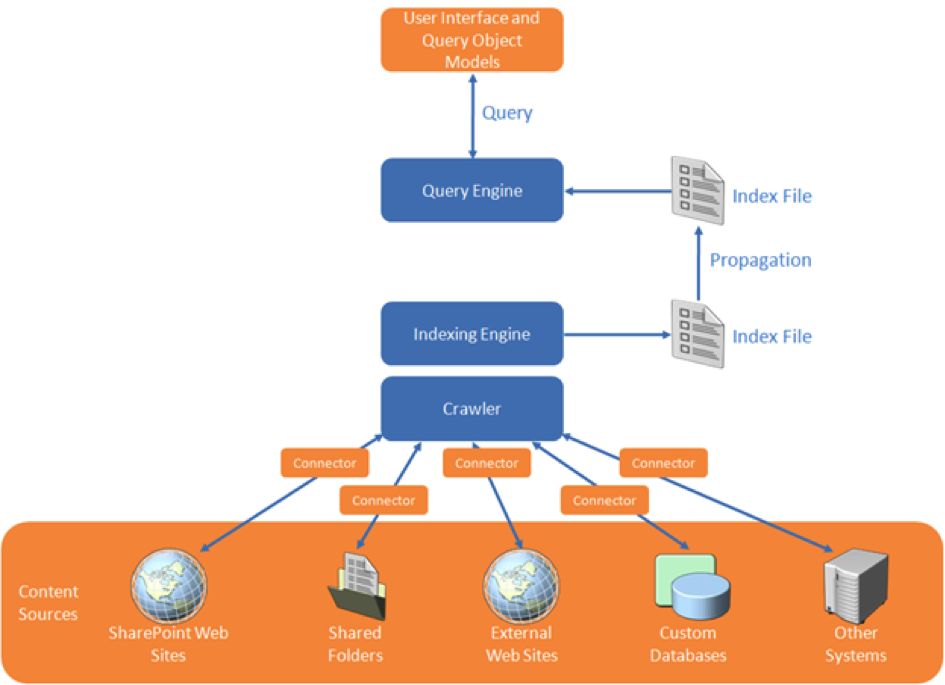

The logical SharePoint FAST search architecture provides two main responsibilities:

- Build the search index administration: in bulk, automated index all data and information which you want to search later. Depending on environmental context, the data sources include SharePoint itself, administrative systems (SAP, Oracle, custom), file shares, ...

- Execute Search Queries against the accumulated index-administration, and expose the search result to the user.

In the indexation step, SharePoint FAST must thus retrieve the data from each of the linked systems. FAST Search supports this via the connector framework. There are standard connectors for (web)service invocation and for database queries. And it is supported to custom-build a .NET connector for other ways of unlocking external system, and then ‘plug-in’ this connector in the search indexation pipeline. Examples of such are connecting to SAP via RFC, or ‘quick-and-dirty’ integration access into an own internal build system.

In this context of search (or better: find) in SAP data, SharePoint FAST supports the indexation process via Business Connectivity Services for connecting to the SAP business system from SharePoint environment and retrieve the business data. What still needs to be arranged is the runtime interoperability with the SAP landscape, authentication, authorization and monitoring. An option is to build these typical plumping aspects in a custom .NET connector. But this not an easy matter. And more significant, it is something that nowadays end-user organizations do no longer aim to do themselves, due the involved development and maintenance costs.

An alternative is to apply Duet Enterprise for the plumbing aspects listed. Combined with SharePoint FAST, Duet Enterprise plays a role in 2 manners:

(1) First upon content indexing, for the connectivity to the SAP system to retrieve the data. The SAP data is then available within the SharePoint environment (stored in the FAST index files). Search query execution next happens outside of (a link into) SAP.

(2) Optional you'll go from the SharePoint application back to SAP if the use case requires that more detail will be exposed per SAP entity selected from the search result.

An example is a situation where it is absolutely necessary to show the actual status. As with a product in warehouse, how many orders have been placed?

Security trimmed: Applying the SAP permissions on the data

Duet Enterprise retrieves data under the SAP account of the individual SharePoint user. This ensures that also from the SharePoint application you can only view those SAP data entities whereto you have the rights according the SAP authorization model. The retrieval of detail data is thus only allowed if you are in the SAP system itself allowed to see that data.

Due the FAST architecture, matters are different with search query execution. I mentioned that the SAP data is then already brought into the SharePoint context, there is no runtime link necessary into SAP system to execute the query. Consequence is that the Duet Enterprise is in this context not by default applied.In many cases this is fine (for instance in the customer example described below), in other cases it is absolutely mandatory to respect also on moment of query execution the specific SAP permissions. The FAST search architecture provides support for this by enabling you to augment the indexed SAP data with the SAP autorisations as metadata.

To do this, you extend the scope of the FAST indexing process with retrieval of SAP permissions per data entity. This meta information is used for compiling ACL lists per data entity. FAST query execution processes this ACL meta-information, and checks each item in the search result whether it allowed to expose to this SharePoint [SAP] user.

This approach of assembling the ACL information is a static timestamp of the SAP authorizations at the time of executing the FAST indexing process. In case the SAP authorizations are dynamic, this is not sufficient.

For such situation it is required that at the time of FAST query execution, it can dynamically retrieve the SAP authorizations that then apply. The FAST framework offers an option to achieve this. It does require custom code, but this is next plugged in the standard FAST processing pipeline.

SharePoint FAST combined with Duet Enterprise so provides standard support and multiple options for implementing SAP security trimming. And in the typical cases the standard support is sufficient.

Applied in customer situation

The above is not only theory, we actually applied it in real practice. The context was that of opening up of SAP Enterprise Learning functionality to operation by the employees from their familiar SharePoint-based intranet. One of the use cases is that the employee searches in the course catalog for a suitable training. This is a striking example of search-driven application. You want a classified list of available courses, through refinement zoom to relevant training, and per applied classification and refinement see how much trainings are available. And of course you also always want the ability to freely search in the complete texts of the courses.In the solution direction we make the SAP data via Duet Enterprise available for FAST indexation. Duet Enterprise here takes care of the connectivity, Single Sign-On, and the feed into SharePoint BCS. From there FAST takes over. Indexation of the exposed SAP data is done via the standard FAST index pipeline, searching and displaying the search results found via standard FAST query execution and display functionalities.

In this application context, specific user authorization per SAP course elements does not appy. Every employee is allowed to find and review all training data. As result we could suffice with the standard application of FAST and Duet Enterprise, without the need for additional customization.

Conclusion

Microsoft SharePoint Enterprise Search and FAST both are a very powerful tool to make the SAP business data (and other Line of Business administrations) accessible. The rich feature set of FAST ESP thereby makes it possible to offer your employees an intuitive search-driven user experience to the SAP data.