Duet Enterprise natively only supports flattened SAP data entities. Typically however business data exhibits a complex structure. Via custom programming against the SharePoint BCS ObjectModel it is possible to integrate complex SAP business entities via Duet Enterprise in SharePoint.

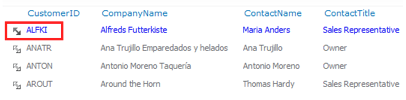

A sales talk of Duet Enterprise is that you can integrate with minimal effort SAP data and functionality in SharePoint UI. This however is only true if your SAP data complies to the following constraints: 1) the SAP data entities must at least be made accessible via a Query and ReadItem operation; 2) in case of full update context, also the Create, Update and Delete operations must be available in the SAP data entity; 3) the SAP data entity must exhibit a flat data structure.

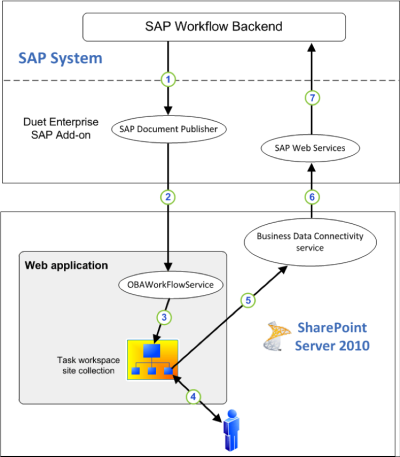

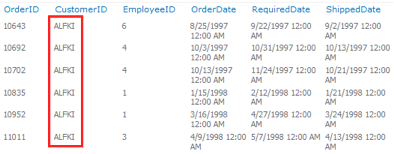

Constraints 1 and 2 are imposed by the usage of SharePoint Business Connectivity Services (BCS) for working with SAP external data from within SharePoint context. As result of the central BCS role in the Duet Enterprise architecture, these constraints are inevitable. Constraint 3 is required to visualize the SAP data via SharePoint External List in the well-known UI List format. Aspect of the list UI metaphor is that it resembles a data table, with row-based data.

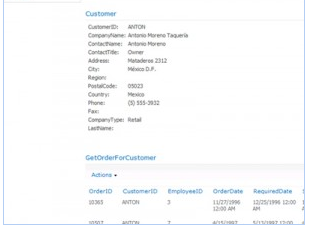

Problem however is that typically, enterprise data is non-flattened. Enterprise data often has an hierarchical structure: parent with multiple child data entities. E.g. an Order containing multiple Orderlines; an Expense form with multiple individual ExpenseDetails. As result of constraint 3, it follows that such complex data cannot be visualized in SharePoint UI via the out-of-the-box External List. Does this mean that SAP complex business entities thus cannot be integrated within SharePoint UI context via Duet Enterprise? The answer to that is negative. The applied Duet Enterprise architecture itself, with SharePoint BCS and the SAP Service Consumption Layer (SCL) in the middle, has neither restrictions nor problems with interoperating on and exchanging hierarchical SAP data. It is merely the External List concept that cannot visualize complex data (note: this limitation is generic, thus not only for SAP data structures, but also non-SAP business data structures; as Oracle, Microsoft Dynamics, SQL-based, WCF data objects, …). You can still apply Duet Enterprise as SAP / SharePoint integration foundation in case of complex SAP data structures, but it requires you to build a custom UI in SharePoint instead of the out-of-the-box External List concept. Nothing withholds you from constructing a custom SharePoint UI to display the hierarchical data, e.g. in a master-slave UI concept.

Well, not entirely true…; a noticeable problem with building a custom UI to interoperate Duet Enterprise, is that the custom UI must then program against the BCS ObjectModel API. And the BCS OM currently only provides a [very] weakly typed programming model, instead of the strong-types we are used to in a regular .NET context (e.g. WCF data objects plus WCF interfaces). The explanation for this is that BCS is a generic concept, intended to interoperate against arbitrary external data repositories and various and a priori unknown data structures. In its current stadium, the mapping at .NET client side to the concrete external data structures is enabled via a general purpose ‘BCS operation language’. The problem with that BCS language is that there is no compile-time checking nor support to prevent you from making typo or structure mistakes.

An example interoperating complex SAP data into SharePoint

Via an example I will demonstrate in overview what it takes to interoperate complex SAP data via Duet Enterprise into a SharePoint front-end.Example

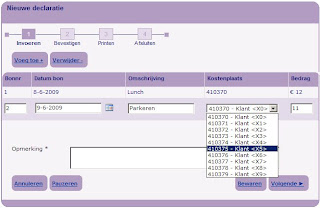

Employee Self-Service process for expense handling. The process consists of multiple steps: it starts when an employee submits an expense form for receiving a refund; next the employee’s manager will review the form, and either approve, deny, or return it for further explanation. In case of approval, the finance department will refund the made expenses. In case of return, the employee can augment the expense form with additional remarks, and then resubmit. Or the employee can decide to withdraw the returned expense form. In case of denied expense, the process directly stops. Each process execution ends with archival of the submitted form and other documentation.Current, this process is already implemented within the SAP environment. Employees can enter a form via a WebDynpro form; which kicks of a SAP workflow process. The manager receives the review task in this workflow within the SAP Universal Work List. Payment is done via the HR business package.

Although thus the expense process is already implemented in the IT landscape, management feels the need to improve on it. Employees are complaining about the WebDynpro UI, which looks and behaves different as the SharePoint based intranet in which they are performing their primary work activities. The managers on the other hand are perfectly satisfied to perform the review task within the SAP GUI, since this is for them also for most other tasks the familiar work environment. The financial handling is mostly automatic performed in the SAP backend, near to the other financial processes; so neither any reason to change on that. The management therefore decides to bring the employee’s involvement in the process into the context of the SharePoint based intranet, while leaving the rest of the process execution untouched (phase 1).

Step 1: derive the requirements and the functional + process requirements

Since the process is already implemented; this step can largely reuse on what is already available qua system and process specifications.Step 2: derive the integration and software architecture

The project goal is enabling employees to operate their steps in the expense handling process directly within the SharePoint intranet, with the same familiar UI look & feel as other parts in that intranet. Implication of that is to interchange the WebDynpro front-end for a SharePoint front-end. Via well-derived integration points this new front-end must hook into the existing expense process.Step 3: define the interoperability interface of the SAP backend

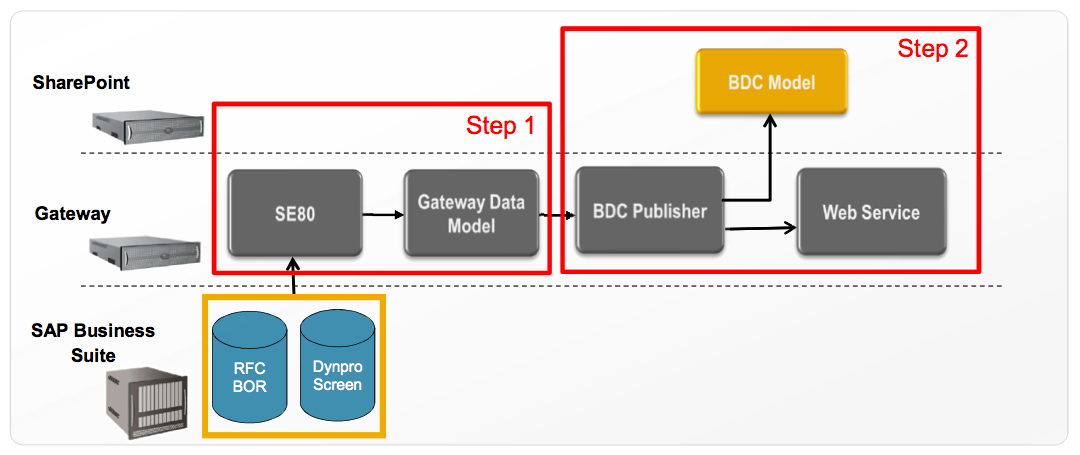

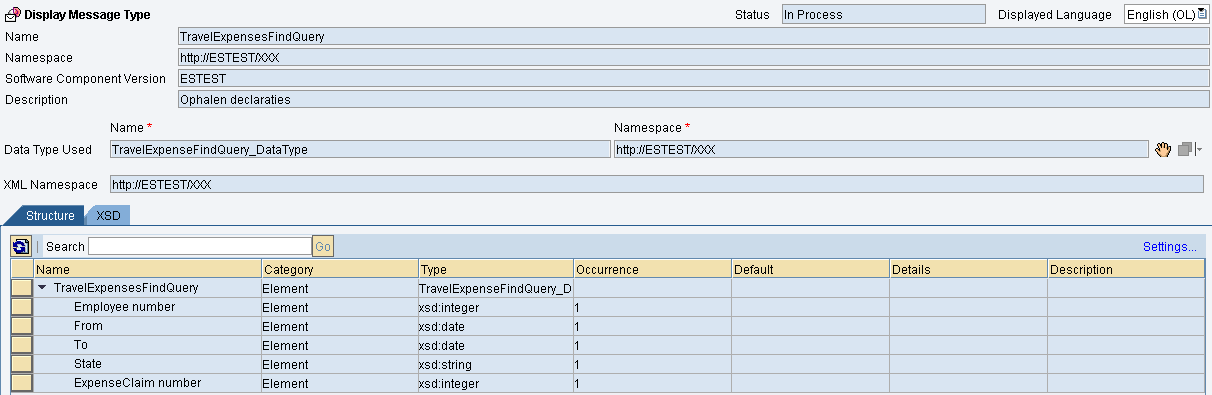

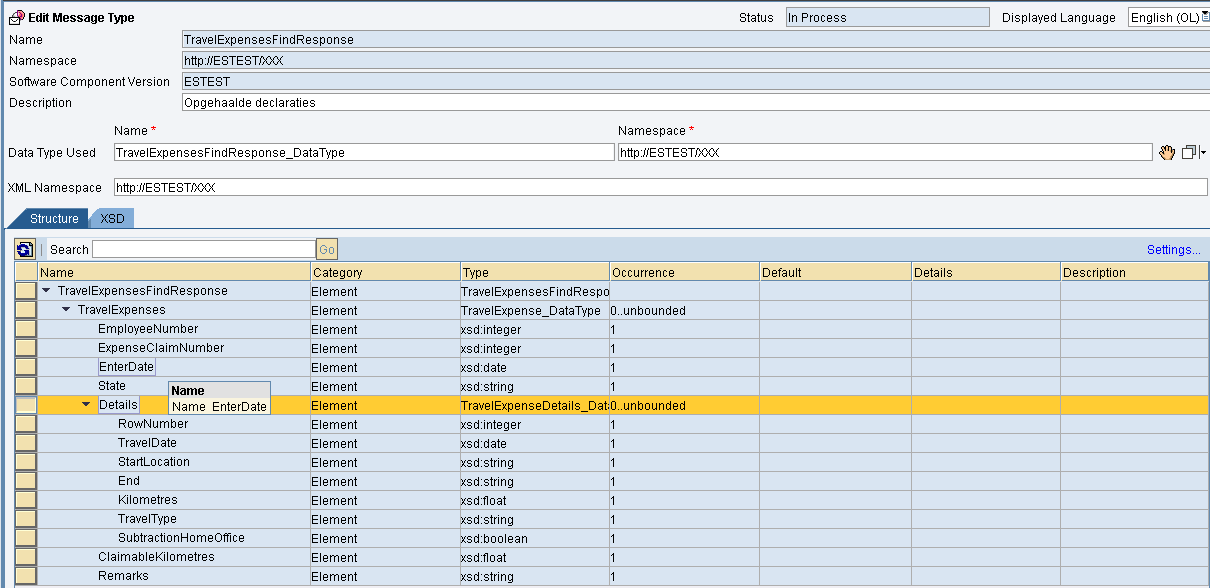

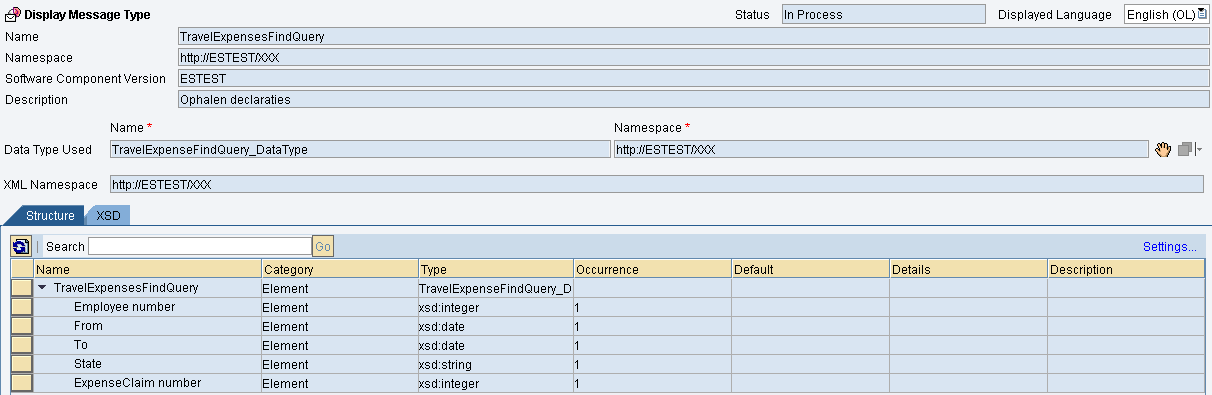

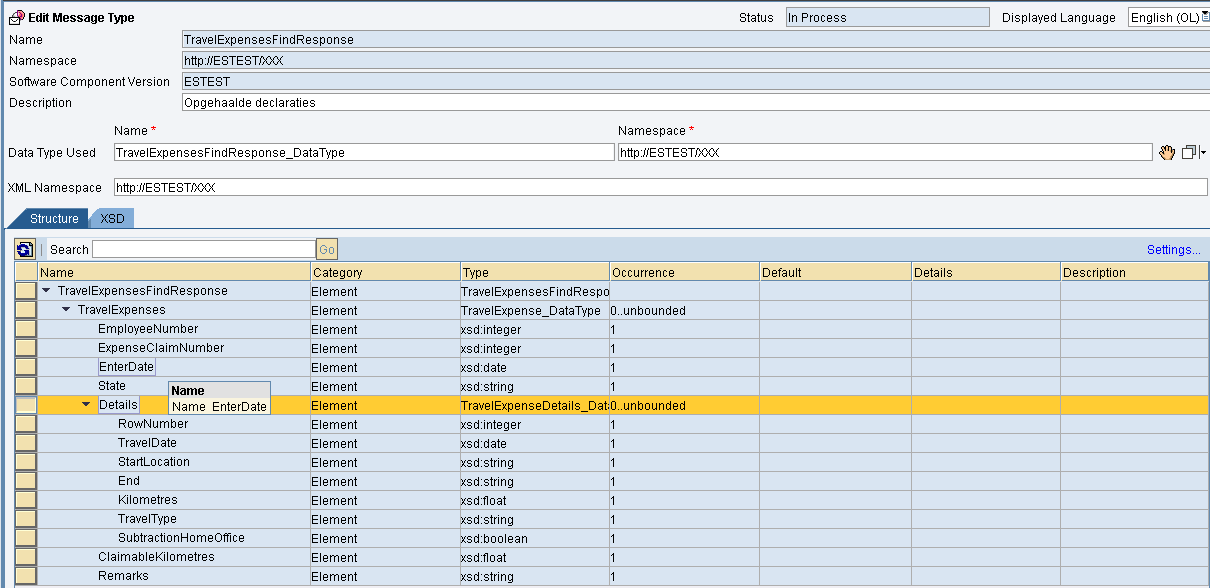

With the integration and software architecture in place, including the conceptual specifications of the integration interfaces SharePoint front-end <– SAP process; the next step is to realize the process integration at SAP side. Following the Duet Enterprise Development Steps, this first means to map the conceptual interfaces onto SAP SCL Interfaces. That development activity is done in SAP Enterprise Service Builder (ES Builder). There, you specify the data types, message types, and method operations.Screenshots of the specification at SAP side of a Query operation signature, with a complex hierarchical SAP data entity

Step 4: Implement the SCL Interfaces

Actually, this is more than one single step. It involves Mapping, connecting to relevant parts in the SAP backend, routing, potentially composition of multiple SAP data entities, et al. Although all very interesting, this has no direct relation or influence on the way complex SAP data can be handled in the SharePoint front-end. Therefore these steps are not discussed here. See the

Duet Enterprise Development Guide for a proper explanation of the steps.

Step 5: Generate the interoperability interface for the SharePoint front-end

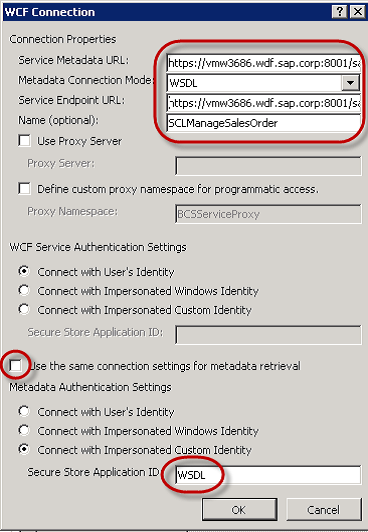

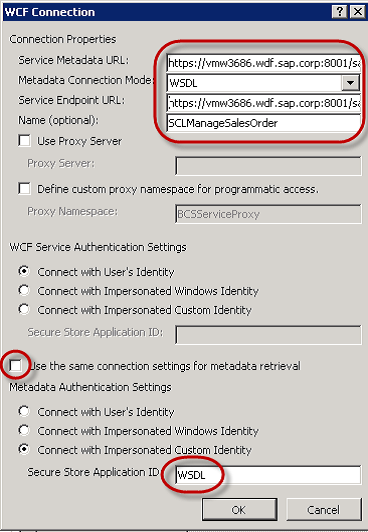

A result of step 3 is the WSDL specification of the SAP SCL interface. With this WSDL, and a runtime SAP Proxy implementation, it is possible to generate an External Content Type. The tool used for this is SharePoint Designer 2010.Screenshots of the generation at SharePoint side of an External Content Type to SAP backend, with a complex hierarchical SAP data entity exchanged

Step 6: Program to the interoperability interface for the SharePoint front-end

In case the SAP data entity had a flat representation, we would be nearly finished. Just generate an External List in SharePoint Designer or via the SharePoint UI, and connect to the generated External Content Type. However, the expense data entity at SAP side exhibits a complex structure: form with multiple detail lines. This cannot be reasonable visualized in the table-oriented format of External List (remember that the start of this project is to improve on the user experience for the employee; so it must feel natural and intuitive). If the External List is not feasible, then it is required to build a custom UI in SharePoint context. The custom UI must interoperate against the BCS ObjectModel API, to query, retrieve, create and update the SAP expense data entities. In its current state, the BCS API can only be operated via a weakly-typed program model. The draw side is that programming at that low-level is both cumbersome as error prone. However, if done correctly and secure, it does the job. And enables you to interoperate complex SAP data entities via Duet Enterprise in a custom SharePoint / .Net context.Example code of interoperating BCS to create a new expense entity within SAP backend

BdcService service = SPFarm.Local.Services.GetValue(String.Empty);

IMetadataCatalog catalog = service.GetDatabaseBackedMetadataCatalog(SPServiceContext.Current);

IEntity entity = catalog.GetEntity("TopForce.com", "Expense");

IView createView = entity.GetCreatorView("Create");

IFieldValueDictionary methodFields = createView.GetDefaultValues();

methodFields["EmployeeNumber"] = "1";

methodFields["Status"] = "open";

methodFields["EmployeeComment"] = "my comment";

methodFields["Date"] = DateTime.Now;

object tickets = methodFields.CreateCollectionInstance("Tickets", 2);

methodFields["Tickets"] = tickets;

methodFields["Tickets[0]"] = methodFields.CreateInstance("Tickets[0]");

methodFields["Tickets[0].Date"] = DateTime.Now;

methodFields["Tickets[0].Amount"] = "35.45";

methodFields["Tickets[0].Description"] = "Description 1";

methodFields["Tickets[1]"] = methodFields.CreateInstance("Tickets[1]");

methodFields["Tickets[1].Date"] = DateTime.Now;

...

Identity id = entity.Create(fieldValueDictionary, LobSysteminstance);

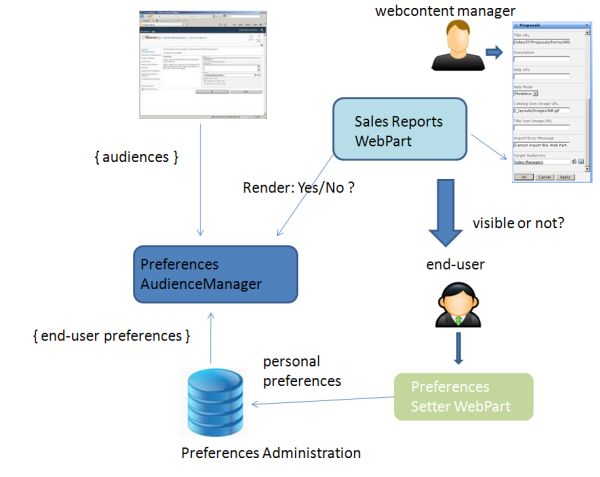

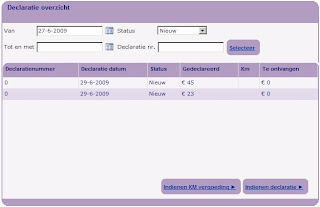

Step 7: Build an UX custom SharePoint front-end

With the integration layer SharePoint/BCS - Duet Enterprise - SAP ready, it is now rather standard ASP.NET programming to build the custom UI. In the custom UI you can utilize the full toolbox of ASP.NET webcontrols, jQuery, and even Silverlight. Another viable option in SharePoint 2010 is InfoPath based forms.Final note

Thus although it requires more work – at the SharePoint side; it is possible to apply Duet Enterprise for SAP / Microsoft interoperability even when dealing with complex SAP data entities. The extra work is restricted to the SharePoint front-end part; the other parts of the Duet Enterprise pipeline are not influenced. Duet Enterprise on itself does not limit the usage of complex SAP data entities; this restriction is merely within the current out-of-the-box capabilities of SharePoint BCS and External List.